AI Ethics: Navigating the Fine Line Between Innovation and Responsibility

Introduction

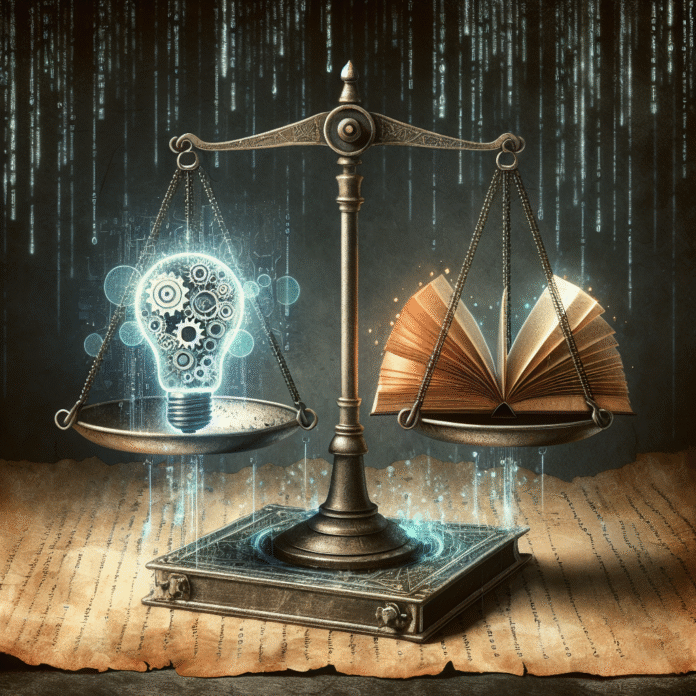

Artificial Intelligence (AI) has become a cornerstone of modern technology, driving innovation across industries. From healthcare to finance, AI promises unprecedented efficiency and insights. However, this rapid advancement raises critical ethical questions that demand our attention. Striking a balance between innovation and responsibility is therefore not optional; it is essential for sustainable development.

The Importance of Ethical AI

As AI systems become more integrated into our daily lives, ethical considerations become paramount. Issues such as bias, privacy, and accountability pose significant challenges. For example, biased algorithms can perpetuate discrimination, while data privacy breaches can undermine public trust. Ethical AI is therefore essential for ensuring fairness and transparency in technology.

Key Areas of Concern

1. Bias and Fairness

One of the most pressing ethical dilemmas in AI is bias. AI systems learn from historical data, which may reflect existing social biases. If unaddressed, these biases can lead to unfair treatment of individuals based on race, gender, or socioeconomic status. Developers must prioritize fairness by building in checks, such as auditing training data and comparing a model's outcomes across demographic groups, to ensure equitable outcomes.
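To make "checks" concrete, here is a minimal sketch of one common outcome check, the demographic parity gap: the difference between the highest and lowest rate of positive decisions across groups. This is only one of several fairness metrics and is not a complete fairness audit. The function names, the 0.1 tolerance, and the toy approval data are all hypothetical and chosen purely for illustration.

```python
from collections import defaultdict

def selection_rates(predictions, groups):
    """Return the fraction of positive (1) predictions within each group."""
    if not predictions:
        raise ValueError("no predictions to evaluate")
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_gap(predictions, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical toy data: 1 = approved, 0 = denied, for two illustrative groups.
preds = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

# Hypothetical tolerance; a real threshold is a policy decision, not a constant.
TOLERANCE = 0.1

rates = selection_rates(preds, groups)
gap = demographic_parity_gap(preds, groups)
needs_review = gap > TOLERANCE
print(rates, gap, needs_review)
```

With this toy data, group A is approved 75% of the time and group B 25% of the time, so the gap of 0.5 exceeds the assumed tolerance and the model would be flagged for human review. A check like this only surfaces a disparity. Deciding whether the disparity is unjustified still requires context and judgment.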

2. Privacy and Data Protection

The use of personal data in AI training raises significant privacy concerns. Consumers are increasingly aware of their data rights, prompting calls for stricter regulation. Companies must navigate these complexities by protecting the data they hold and obtaining informed consent from users, and ethical frameworks should guide how that data is used.

3. Accountability and Transparency

As AI systems make decisions that affect people’s lives, accountability becomes crucial. Who is responsible when an autonomous vehicle malfunctions, or when a healthcare AI misdiagnoses a condition? Developers and organizations must make their AI systems transparent, so that stakeholders can understand how decisions are made and who is accountable for them.

Moving Towards Responsible AI

To foster a culture of ethical AI, stakeholders must collaborate. Policymakers, developers, and ethicists should work together to create robust guidelines that promote ethical practices. Companies should prioritize ethical training for their teams, ensuring that ethical considerations are woven into the development process from the start.

Conclusion

As we move toward an AI-driven future, balancing innovation and responsibility is essential. We have the opportunity to harness AI’s potential while upholding ethical standards that ensure fairness, privacy, and accountability. By committing to responsible AI practices, we can build a future that benefits everyone without compromising our ethical principles.